A reader writes:

After reading "Welcome to my museum", I'm now fascinated by the power supply equipment used on early Cray supercomputers. Can you explain more about the Motor-Generator Unit, and where you found the information? There doesn't seem to be much literature about it on the interwebs.

Colin

I found out about the extraordinary supporting equipment the Cray-1 needed in the "Cray-1 Computer Systems M Series Site Planning Reference Manual HR-0065", dated April 1983, which you can get in PDF format here.

I think I originally found that manual in the Bitsavers PDF Document Archive, here. They've got a bunch of other old Cray documentation in this directory, including document HR-0031, the manual for the optional Cray-1/X-MP Solid-State Storage Device (SSD).

You could very easily mistake that device for a modern SSD, except for minor details like how it had a maximum capacity of 256 megabytes, and was larger and heavier than some cows. I'm not sure quite how much larger and heavier, though, because that's covered by document HR-0025, which unfortunately doesn't seem to be online anywhere.

(The top-spec 256MB version of the SSD did have a 1250-megabyte-per-second transfer rate, though, more than double the speed of the fastest PC SSDs as I write this. The Cray SSD's main purpose was apparently to serve as a fast buffer between the supercomputer's main memory and its relatively slow storage. Traditional supercomputers, as I've written before, were always more about I/O bandwidth than sheer computational power.)
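(To put those two numbers side by side, here's a back-of-the-envelope sketch. It uses only the capacity and transfer rate quoted above, and assumes the peak rate could be sustained for the whole transfer, which the manual may or may not promise. The function name is just for illustration.)

```python
# Figures quoted in the post: 256 MB top-spec capacity, 1250 MB/s peak rate.
CAPACITY_MB = 256
RATE_MB_PER_S = 1250


def full_transfer_seconds(capacity_mb, rate_mb_per_s):
    """Time to move the whole device's contents, assuming the peak
    rate is sustained from start to finish (an assumption)."""
    return capacity_mb / rate_mb_per_s


seconds = full_transfer_seconds(CAPACITY_MB, RATE_MB_PER_S)
print(f"{seconds * 1000:.0f} ms to read or write the entire SSD")
```

(On those assumptions, the whole device could be filled or emptied in about a fifth of a second, which fits its role as a buffer rather than as long-term storage.)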

The Site Planning Reference Manual is sort of a tour rider for a computer. Van Halen's famous rider had that thing about brown M&Ms in it as a test to see whether people at the venue had read the rider, and were thus aware that they needed to provide not only selected colours of confectionery, but also a strong enough stage and a big enough power supply. I presume the Site Planning Manual has in it somewhere a requirement that there be an orange bunny rabbit painted on one corner of the raised flooring.

(At this point I have to mention Iggy Pop's rider as well, not because it's at all relevant to the current discussion, but because it's very funny.)

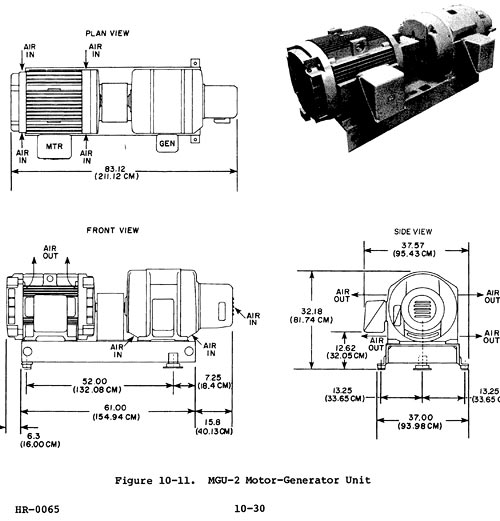

I think the deal with the Motor-Generator Unit was that the Cray-1 needed not just enormous amounts of power (over a hundred kilowatts!), but also very stable power. So it ran from a huge electric generator connected directly to a huge electric motor, the motor running from dirty grid power and the generator, in turn, feeding the computer's own multi-voltage PSU. The Cray-1 itself weighed a mere 2.4 tonnes, but all this support stuff added several more tonnes.

(My copy of the HR-0065 manual is over on dansdata.com, hosted by m'verygoodfriends at SecureWebs, who in their continuing laudable attempts to wall off IP ranges corresponding to the cesspits of the Internet occasionally accidentally block traffic from some innocent sources, like an Australian ISP or two. If you can't get the file there, you can of course go to Bitsavers instead, or try this version, via Coral. You can use Coral to browse the whole of Dan's Data if SecureWebs isn't playing ball, though it may be a few hours out of date.)

15 June 2012 at 6:33 pm

There's only one valid reason to buy an early Cray:

To use as a sofa!

We had one at my university - not the world's most comfortable piece of furniture, but surely the most iconic!

17 June 2012 at 8:34 am

Yeah - the abovementioned SSD was built to be another slice of the Cray-1 pie, complete with upholstery. I don't know how many components you needed to get the full circle.

It's kind of odd that the machine's power-purity demands were such that a motor-generator rig was necessary to keep it properly fed (and that it needed complex expensive systems just to run the other complex expensive systems that actually did the computing and storage), but people SITTING on the supercomputer were not a problem!

19 June 2012 at 12:30 am

The thing is mechanically processing a hundred kilowatts. The mechanical strain of someone sitting on it is not exactly going to matter much... as for the rest of the machine, when it's so expensive anyway, why not build a sturdy enough case to withstand all reasonable insults? It's one less thing to debug...

15 June 2012 at 10:49 pm

Why did they have plexiglass sides on some of the Cray computers?

So you could "see more Cray."

Hahah!

15 June 2012 at 11:24 pm

I've built M-G sets, but cheap inverter power supplies are making them less popular. The main use is to ensure "clean" power, free from transient voltage dips and spikes, but they can be combined with a flywheel to produce a UPS effect. We also made M-G sets for ships that need to convert frequency from (say) 50 Hz to 60 Hz.

16 June 2012 at 3:29 am

I seem to recall they were also very handy for working with old WWII aircraft electronics (radios and the like) which were designed to work with 400 Hz AC in the interest of reducing the weight of the transformers.

I seriously considered building an M-G set when I realized I needed a few hundred amperes at a few millivolts to drive an MHD desk toy, but that would have defeated the no-moving-parts idea behind the toy in the first place. They're still used for some pulsed-power supplies, storing loads of energy in a big flywheel which you then turn around and use as a homopolar generator to produce massive currents for short pulses.

18 June 2012 at 12:05 pm

First of all, there's exactly one hit on Google for "MHD desk toy", and it's this page.

Secondly, I WANT ONE!!!! Where do I get one?!?!?!

--Me

19 June 2012 at 12:34 am

Still working on the construction. The permanent-magnet version was a bust; now I'm looking at an induction-motor-based design. And galinstan's not a great medium, so I'm thinking it might have to be mercury. (Not quite mad enough to consider NaK. Well, not seriously. But it has that lovely low density...)

16 June 2012 at 3:10 am

You link to the article at http://www.dansdata.com/gz017.htm that you wrote 10 years ago about comparing supercomputers to graphics chips. It really doesn't hold up so well anymore. More and more supercomputers are becoming racks and racks of nearly commodity hardware (or for certain classes of problems, systems designed to pack as many graphics chips as possible into the smallest amount of space) with really impressive network interconnects and specialized operating systems underneath optimized to use this.

That's not to say that they don't do _anything_ special. More and more you have supercomputers optimized for different problem sets. I'm especially impressed with things like routing one of the memory cache coherency buses (a modern server processor is likely to have several of these buses; see http://en.wikipedia.org/wiki/Cache_coherence) out to a switched network. This way you can have thousands of motherboards, each with their own RAM and processors, all _actually_ seeing one coherent flat memory space with many TB of RAM rather than trying to emulate that in software.

16 June 2012 at 3:38 am

Well, the supercomputer we have upstairs from the lab is just a pile-of-rackmount-PCs with modest IO, and the IO is the biggest pain in operating the thing. You're constantly fighting to have the right data on the right machine, which limits the interchangeability of the nodes, which drastically increases the PITA factor.

For contrast, the machine we use to dedisperse incoming pulsar signals was designed with IO in mind, even though 10GigE interfaces on either end plus PCI to communicate with the heap of GPUs is plenty. (Oh, and it has an FPGA on one end.)

For another example, the IBM BlueGene supercomputer driving LOFAR (a low-frequency radio telescope spanning Europe) has a very simple task: compute all the pairwise products of the datastreams from tens or hundreds of tiles. Here there's practically no computations (okay, there's some FFTing to channelize it, but the heavy lifting is really just multiplying two data streams) but IO is a massive concern.
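To give a feel for why "all the pairwise products" turns into an IO problem, here is a minimal sketch. It is not LOFAR's actual pipeline. It assumes the streams are already channelized into complex samples, that each product is the usual conjugate-multiply-and-average, and that a stream is not paired with itself. The names `baseline_count` and `correlate` are made up for illustration.

```python
from itertools import combinations


def baseline_count(n_streams):
    """Number of distinct pairs among n_streams inputs: n(n-1)/2."""
    return n_streams * (n_streams - 1) // 2


def correlate(streams):
    """streams: dict mapping a tile name to a list of complex samples.

    Returns the time-averaged product x_a * conj(x_b) for every pair.
    The conjugate-and-average step is an assumption about what
    "pairwise product" means here.
    """
    products = {}
    for (a, xa), (b, xb) in combinations(streams.items(), 2):
        total = sum(p * q.conjugate() for p, q in zip(xa, xb))
        products[(a, b)] = total / len(xa)
    return products


# The arithmetic per pair is trivial, but the number of pairs grows
# quadratically, and every pair needs both input streams delivered
# to wherever the multiply happens.
for n in (10, 100, 500):
    print(n, "streams ->", baseline_count(n), "pairs")
```

Each multiply is cheap; the hard part is that every stream has to reach every other stream's multiplier, which is a data-movement problem rather than an arithmetic one.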

So no, I think I'd say that IO is still the central concern for many supercomputers. In fact, I'd argue that's what distinguishes an individual supercomputer from something like Einstein@Home or BOINC - the latter have more flops, and are suitable for some tasks (finding pulsars, for example) but just don't have the IO to do some things. For those we need lots of flops glued together with loads of IO, a configuration we call "supercomputer".

16 June 2012 at 6:39 am

I never said that IO wasn't the biggest concern with respect to supercomputers, but as you point out, in many cases a supercomputer is just a room full of modern PCs. The biggest difference in these cases is that there's lots more money thrown at the network infrastructure and that it's optimized differently. But it's still just commodity hardware. Even when you have multiple 10Gb/s interconnects between nodes, it is nowhere near the memory bandwidth of any of the processors (primary RAM has nowhere near the memory bandwidth of the processors).

But it used to be a hallmark of supercomputers that they were able to keep the processor fed with data from RAM. This is no longer the case since commodity CPUs are now faster than any RAM subsystem you can build for any "reasonable" sum of money and what this extra money buys you isn't much more than what you'll find in a typical server room.

There _are_ still specialized supercomputer systems though, but even with those specializations, most still aren't _that_ different from commodity hardware.

29 June 2012 at 6:07 am

Another early SSD, probably the same era, roughly, was the DEC ESE-20 (if I remember the number right). Nothing as fancy as the Cray Pie-Slice Sofa Bit (if that one developed Bit Rot, would your data then be lost down the back of the sofa?), but a mundane one-meter high 19" cabinet, offering you 120MB of rather fast storage. The guts of the unit were a MicroVAX II with Lotsa Memory running VAXELN, an RD54 disk drive, and a hefty battery. On loss of power the memory contents would be written to the disk, to be restored when the unit got back into operating mode. This would take nearly an hour either way, or infinitely long if the RD54 refused to play ball after the previous era of inactivity or the battery had lost its propensity to push electrons around.

In my life as a DEC FS tech, I've seen only one in the field.