A fundamental duality of software engineering

A couple of weeks ago I proposed a small quiz. (I also stated that the answer would come “on Wednesday” — please understand any such promise as “whenever I find the time”. Sorry.) Here is the answer.

The quiz was:

I have a function:

- For 0 it yields 0.

- For 1 it yields 1.

- For 2 it yields 4.

- For 3 it yields 9.

- For 4 it yields 16.

What is the value for 5?

Shortly thereafter I added a hint: the value for 5 is 25, and changed the question to: “What is the value for 6?”. For good measure we can also ask about the value for 1000. Now compare your answer to what follows.

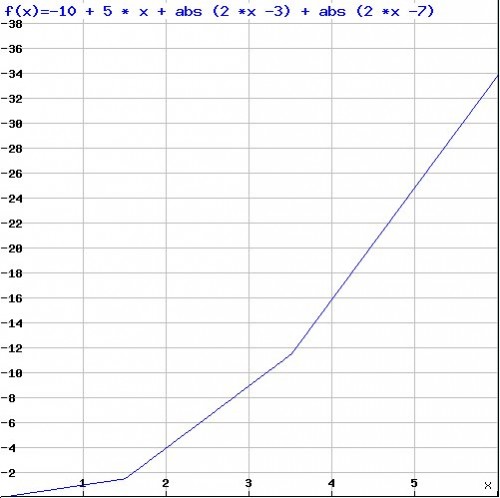

A good answer for the value at 6 is: 34 . The function in this case is -10 + 5 x + |2 x – 3| + |2 x -7|. It matches the values for the given inputs.

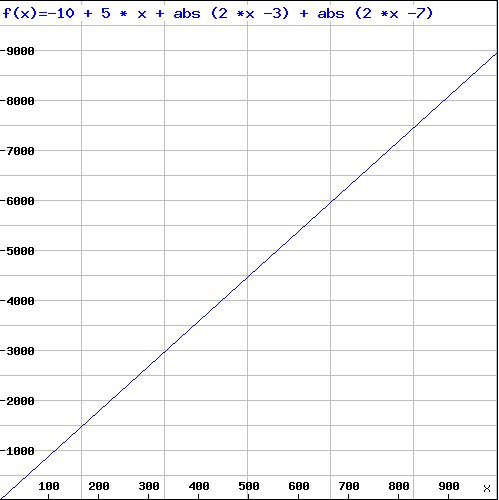

The value for 1000 is 8980:

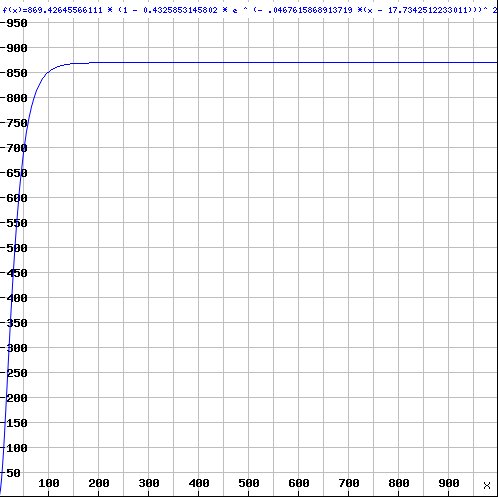

Another good answer at position 6 is 35.6. It comes up if we assume the function is over reals rather than integers; then a possible formula, which correlates very well (R-square of 0.9997) with the values at the given inputs, is:

869.42645566111 (1 – 0.4325853145802 e-.0467615868913719 (x – 17.7342512233011))2.3116827277657443

with a quite different asymptotic behavior, giving the value 869.4 at position 1000:

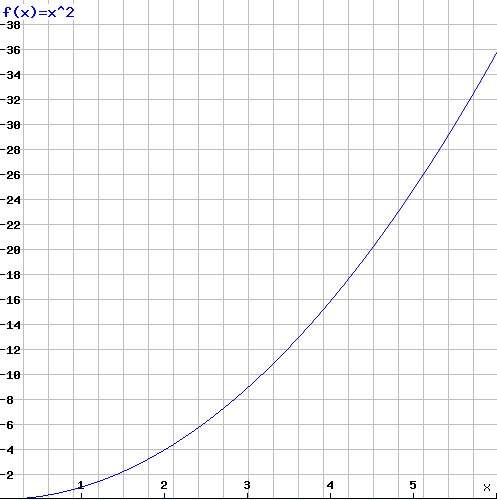

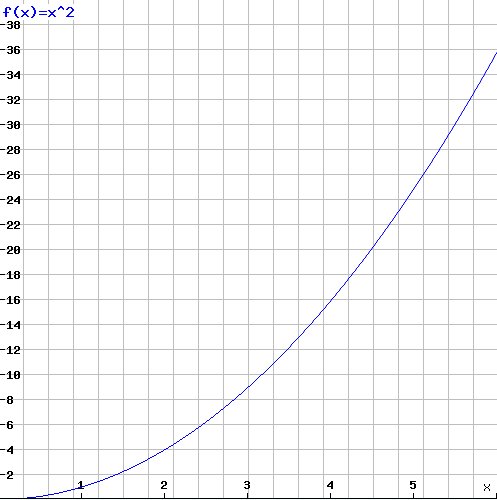

Some readers might have thought of another possibility, the square function x2, which again matches all the given values:

So which of these answers is right? Each is as good as the others, and as bad. There is in particular no reason to believe that the values given in the quiz’s statement suggest the square function. Any function that fits the given values, exactly (if we stick to integers) or approximately (with reals as simulated on a computer) is an equally worthy candidate. Six inputs, or six thousand, do not resolve the question. At best they are hints.

This difference between a hint and a solution is at the core of software engineering. It is, for example, the difference between a test and a specification. A test tells us that the program works for some values; as Dijkstra famously pointed out, and anyone who has developed a serious program has experienced, it does not tell us that it will work for others. The more successful tests, the more hints; but they are still only hints. I have always wondered whether Dijkstra was explicitly thinking of the Popperian notion of falsifiability: no number of experiments will prove a physical theory (although a careful experiment may boost the confidence in the theory, especially if competing theories fail to explain it, as the famous Eddington expedition did for relativity in 1919 [1]); but a single experiment can disprove a theory. Similarly, being told that our function’s value at 6 is 34 disqualifies the square function and the last one (the exponential), but does not guarantee that the first function (the linear combination) is the solution.

The specification-testing duality is the extension to computer science of the basic duality of logic. It starts with the elementary boolean operators: to prove a or b it suffices to establish a or to establish b; and to disprove a and b it suffices to show that a does not hold or to show that b does not hold. The other way round, to disprove a or b we have to show that a does not hold and to show that b does not hold; to prove that a and b holds, we have to show that a holds and to show that b holds.

Predicate calculus generalizes or to ∃, “there exists”, and and to ∀, “for all”. To prove ∃ x | p (x) (there is an x of which p holds) it suffices to find one value a such that p (a); let’s be pretentious and say we have “skolemized” x. To disprove∀ x | p (x) (p holds of all x) it suffices to find one value for which p does not hold.

In software engineering the corresponding duality is between proofs and tests, or (equivalently) specifications and use cases. A specification is like a “for all”: it tells us what must happen for all envisioned inputs. A test is like a “there exists”: it tells us what happens for a particular input and hence, as in predicate calculus, it is interesting as a disproof mechanism:

- A successful test brings little information (like learning the value for 5 when trying to figure out what a function is, or finding one true value in trying to prove a ∀ or a false value in trying to prove a ∃).

- An unsuccessful test brings us decisive information (like a false value for a ∀): the program is definitely not correct. It skolemizes incorrectness.

A proof, for its part, brings the discussion to an end when it is successful. In practice, testing may still be useful in this case, but only testing that addresses issues not covered by the proof:

- Correctness of the compiler and platform, if not themselves proved correct.

- Correctness the proof tools themselves, since most practical proofs require software support.

- Aspects not covered by the specification such as, typically, performance and usability.

But for the properties it does cover the proof is final.

It is as foolish, then, to use tests in lieu of specifications as it would be to ignore the limitations of a proof. Agile approaches have caused much confusion here; as often happens in the agile literature [2], the powerful insight is mixed up with harmful advice. The insight, which has significantly improved the practice of software development, is that the regression test suite is a key asset of a project and that tests should be run throughout. The bad advice is to ditch upfront requirements and specifications in favor of tests. The property that tests lack and specifications possess is generality. A test is an instance; a thousand tests can never be more than a thousand instances. As I pointed out in a short note in EiffelWorld (the precursor to this blog) a few years ago [3], the relationship is not symmetric: one can generate tests from a specification, but not the other way around.

The same relationship holds between use cases and requirements. It is stunning to see how many people think that use cases (scenarios) are a form of requirements. As requirements they are as useless as one or ten values are to defining a function. Use cases are a way to complement the requirements by describing the system’s behavior in selected important cases. A kind of reality check, to ensure that whatever abstract aims have been defined for the system it still covers the cases known to be of immediate interest. But to rely on use cases as requirements means that you will get a system that will satisfy the use cases — and possibly little else.

When I use systems designed in recent years, in particular Web-based systems, I often find myself in a stranglehold: I am stuck with the cases that the specifiers thought of. Maybe it’s me, but my needs tend, somehow, to fall outside of these cases. Actually it is not just me. Not long ago, I was sitting close to a small-business owner who was trying to find her way through an insurance site. Clearly the site had a planned execution path for employees, and another for administrators. Problem: she was both an employee and the administrator. I do not know how the session ended, but it was a clear case of misdesign: a system built in terms of standard scenarios. Good specification performs an extra step of abstraction (for example using object-oriented techniques and contracts, but this is for another article). Skipping this step means forsaking the principal responsibility of the requirements phase: to generalize from an analysis of the behavior in known cases to a definition of the desired behaviors in all relevant cases.

Once more, as everywhere else in computer science [4], abstraction is the key to solid results that stand the test of time. Definitely better than judging a book by its cover, inferring a function by its first few values, verifying a program by its tests, or specifying a system by its use cases.

References

[1] See e.g. a blog article: Einstein and Eddington, here.

[2] Bertrand Meyer: Agile! The Good, the Hype and the Ugly, 2013, to appear.

[3] Bertrand Meyer: Test or spec? Test and spec? Test from spec!, EiffelWorld column, 2004 available here.

[4] Jeff Kramer: Is Abstraction the Key to Computer Science?, in Communications of the ACM, vol. 50, no. 4, April 2007, pages 36-42, available from CiteSeer here

Informatics Europe

Informatics Europe LASER summer school

LASER summer school Propulsion Academy

Propulsion Academy

Based on Occam’s Razor, the solution x^2 might have been the best solution. Unfortunately, Occam’s Razor is not a good approach to verify software. :-)

[…] Forther reading: A fundamental duality of software engineering by Bertrand […]

Let’s separate several issues: (1) the power of mathematical notations and analysis; (2) the possibility of infallible, top-down rationality; (3) the remaining value of a theory in the face of counterexamples.

Let’s stipulate (1).

Now take (3). Popper’s idea that a counterexample invalidates a theory and requires it to be discarded is too rigid, unnecessary, impractical, and not empirically valid when it comes to describing actual scientific practice. A practical scientist looks to evolve/repair/enhance a theory, progressively, in the face of new information. In this sense, the theory y = x^2 is a reasonable one and a program that computes y = x^2 is ok until such time as additional information requires theory, and program, evolution. Lakatos or someone of his ilk provides a better philosophical frame for agile development than Popper in this sense.

Now for (2). If it could easily have been determined from the outset that a specification (theory) other than y=x^2 was the correct specification, then of course it would have been wasteful to develop a program to the wrong (y=x^2) theory. In practice, however, the biggest challenge is to develop a theory of what is needed in the absence of complete information. In such cases, one is in a position of having to develop a theory — a hypothesis — based on incomplete information. One might reasonably employ abductive reasoning, looking for the “best explanation” for the information that one does have. In this sense, y=x^2 is a pretty reasonable *guess*. One does one’s best to validate the guess, then acts on it, implementing a program that computes y=x^2. If later it becomes clear that (6,34) is a required behavior, well, then one is in the position of having to maintain the software in the face of changed requirements. Perhaps the program has to be discarded entirely. Perhaps significant parts of it (e.g., user interface, choices of language and libraries, etc) can be “saved,” with only some parts being repaired.

To the extent that we actually know precise, detailed, formal specifications from the outset we should use them. In reality we often don’t. In these cases, we guess. We guess a theory. Guessing is a fundamental operation in science. When Feynman was asked how he comes up with his theories, he answers, “I guess.” Some people are better guessers than others, of course, based on experience and intellect. Returning to (3), when our guesses (specifications) and actions we take based on them (implementing programs) turn out not to be completely right, we don’t necessarily start completely over. We adapt. We adjust. We evolve our ideas, our specifications, our programs.

Abductive inference and progressive refinement in the face of evolving knowledge seem to me to be of the essence in all of software engineering, and engineering, in general. Popper is not our guy. The likes of Lakatos, and well before him, Charles Saunders Pierce, provide a better account of, and a better intellectual framework for, software development than old Mr. Popper.

I alluded to Kuhn and you are right that Popper is the other clear reference here (I would also add Bachelard).

While many “Agile” developers fall into the trap you describe, test-driven development (TDD) has always emphasized the goal of creating an executable specification. Also, Agile has never prohibited written specifications or other alternatives, like contracts, as long as there is a reasonable justification for them, e.g., for life-critical software in medical instrumentation and avionics. There is widespread confusion on what Agile “proscribes”.

Recently, many of us have been driving towards a synthesis of TDD and automated specifications that eliminates the instance-based tests you correctly criticize. An example is the QuickCheck tool invented in the Haskell community and now ported to other languages. Design by Contract in various forms is seeing a revival. Behavior-driven development (BDD) is also a step in the right direction, although it doesn’t emphasize “for all” as much as it should.

“the Kuhnian notion of falsifiability…”

It is actually the Popperian notion of falsifiability…

Several people have noted this, thanks. In fact I had both in mind but you are right that Popperian is the more apt characterization and I have corrected the text accordingly.

[…] un reciente comentario, Bertrand Meyer nos confronta con la dualidad entre demostración y ensayos (proof and testing). […]

Thanks for this thought-provoking article. I don’t totally agree with you though.

The proof/test couple you depicted in mathematical terms can only be rightfully compared to the specification/scenario couple in software if :

1) Your software specifications are some kind of complete, elegant and immutable truth, just like a scientific proof.

2) Your domain experts, who (we’ll assume) can think of scenarios for their domain problem, are also able to produce accurate upfront abstract specifications in the first place, just like a mathematician can produce a proof.

I’d argue that these two conditions are not often met in software development. They might be, in the case of software used in science, research or academia. The domain experts will generally have a strong scientific background and already be seasoned crafters of very precise abstract specifications or proofs. Actually, they may well be the ones who program as well (in which case their scientific model or solution will literally be the specification for their program, and their experiments, the testing scenarios).

However, we know the problems enterprise and mainstream applications solve and the needs they address can rarely be posed in hard science terms. They are eminently human, subject to interpretation and will often change due to the ephemeral nature of business requirements. Most of the time, people with the domain knowledge won’t be able to produce good abstract specifications either, let alone think of an all-encompassing scheme in advance. They might not even know if their problems have a computerized solution.

In such environments, it’s a good idea to reverse the process. Tests, use cases or scenarios are one of the best ways to help the domain experts express what they need, because they are just a very natural inspiration for human brains to start thinking and elaborating abstractions upon. Then only the specifications can be materialized in the form of detailed documents, comprehensive diagrams, etc. Or – as Agile acknowledges, they may turn out being so tedious to write down and so much subject to change that we’d be better off doing the minimum necessary amount of them and consider the software itself as the main specification.

So, Agile approaches don’t necessarily state that tests and scenarios *are* the specifications. They *use* tests and scenarios as a human brain-friendly way of helping programmers produce the only valid specification in an ever-changing enterprise world – the code base itself.

[…] here “zero” is “oh”. PPS: inspired by Bertrand Meyer’s Fundamental Duality in Software Engineering Share:TwitterLike this:LikeBe the first to like […]

We should reverse the terminology, and say that a test failed when it detected no inconsistency, and that it was successful when it detected an inconsistency between what was expected and what was obtained.