Efficiency

Least Recently Used is a popular eviction policy due to its simplicity and good hit rate in common scenarios. However, in typical workloads LRU is not optimal and may have a poor hit rate in cases like full scans. The following sections compare the best alternatives after an extensive evaluation. For brevity only the top three performing O(1) eviction policies are discussed. This excludes Clock based policies that trade O(n) worst case time complexity in order to be more amenable to concurrent implementations.

Caffeine uses the Window TinyLfu policy due to its high hit rate and low memory footprint.

ARC uses a queue for items seen once, a queue for items seen multiple times, and non-resident queues for evicted items that are being monitored. The maximum size of the queues is adjusted dynamically based on the workload pattern and effectiveness of the cache.

The policy is simple to implement, but requires that the cache size is doubled in order to retain evicted keys. It is also patented and cannot be used without a license agreement with IBM.

LIRS organizes blocks by their inter-reference recency (IRR) and groups entries as either having a low (LIR) or high (HIR) inter-reference recency. A LIR entry is preferable to retain in the cache and evicted HIR entries may be retained as non-resident HIR entries. This allows a non-resident HIR entry to be promoted to a LIR entry shortly after a cache miss.

The policy is complicated to implement and only achieves its maximum efficiency when the cache size is tripled in order to retain evicted keys.

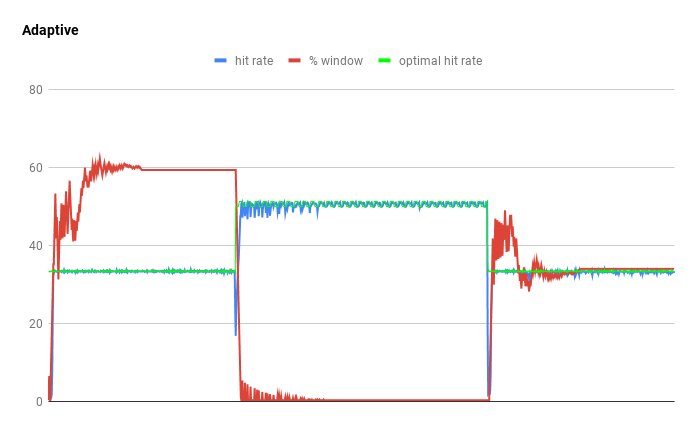

W-TinyLfu uses a small admission LRU that evicts to a large Segmented LRU if accepted by the TinyLfu admission policy. TinyLfu relies on a frequency sketch to probabilistically estimate the historic usage of an entry. The window allows the policy to have a high hit rate when entries exhibit recency bursts which would otherwise be rejected. The size of the window vs main space is adaptively determined using a hill climbing optimization. This configuration enables the cache to estimate the frequency and recency of an entry with low overhead.

This implementation uses a 4-bit CountMinSketch, growing at 8 bytes per cache entry to be accurate. Unlike ARC and LIRS, this policy does not retain evicted keys.

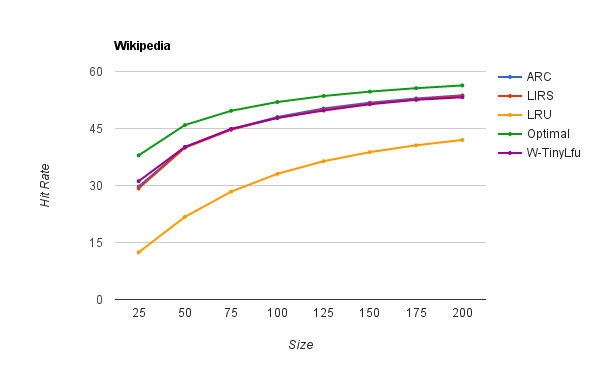

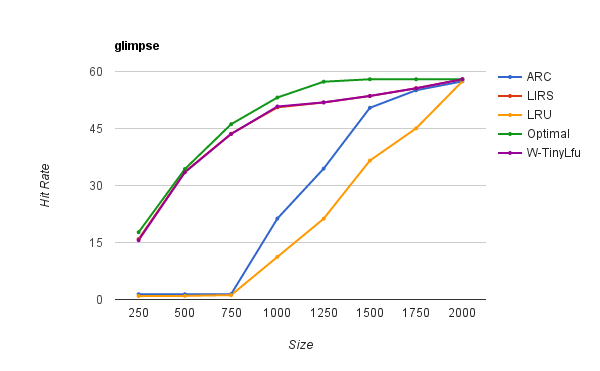

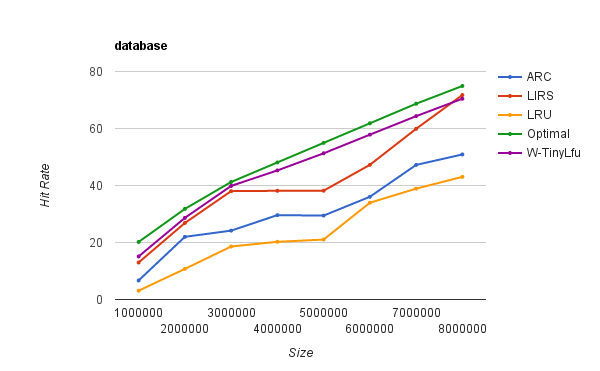

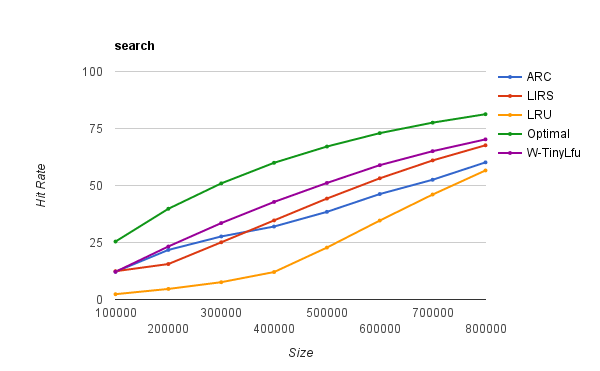

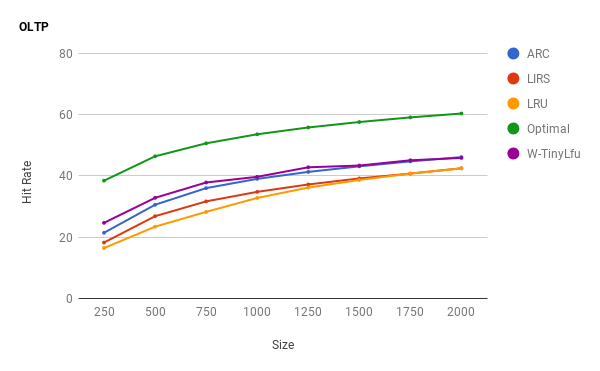

The eviction policies are compared against Bélády's optimal for the theoretical upper bound. A subset of the traces evaluated are described to provide a range of workloads.

WikiBench provides a trace of 10% of all user requests issued to Wikipedia.

This trace is provided by the authors of the LIRS algorithm and exhibits a looping access pattern.

This trace is provided by the authors of the ARC algorithm and is described as "a database server running at a commercial site running an ERP application on top of a commercial database."

This trace is provided by the authors of the ARC algorithm and is described as "disk read accesses initiated by a large commercial search engine in response to various web search requests."

This trace is provided by the authors of the ARC algorithm and "contains references to a CODASYL database for a one hour period."

This workload shifts between a recency-skewed trace (Corda) and a frequency-skewed trace (5x Lirs' loop). This demonstrates W-TinyLfu's ability to reconfigure itself.

Window TinyLfu provides a near optimal hit rate and is competitive with ARC and LIRS. It does so while remaining simple, does not require non-resident entries, and has a low memory footprint. The policy provides a substantial improvement to LRU across a variety of workloads, making it a good choice for a general purpose cache.