According to his official biographer, Steve Jobs went ballistic in January 2010 when he saw HTC's newest Android phones. "I want you to stop using our ideas in Android," Jobs reportedly told Eric Schmidt, then Google's CEO. Schmidt had already been forced to resign from Apple's board, partly due to increased smartphone competition between the two companies. Jobs then vowed to "spend every penny of Apple's $40 billion in the bank to right this wrong."

Jobs called Android a "stolen product," but theft can be a tricky concept when talking about innovation. The iPhone didn't emerge fully formed from Jobs's head. Rather, it represented the culmination of incremental innovation over decades—much of which occurred outside of Cupertino.

Innovation in multitouch and smartphone technology spans decades (the first multitouch devices were created in the 1980s) and involves a large number of researchers and commercial firms. It wouldn't have been possible to create the iPhone without copying the ideas of these other researchers. And since the release of Android, Apple has incorporated some Google ideas into iOS.

You can call this process plenty of names, some less than complimentary, but consumers generally benefit from the copying within the smartphone market. The best ideas are quickly incorporated into all the leading mobile platforms.

The current legal battles over smartphones are a sequel to the "look and feel" battle over the graphical user interface (GUI) in the late 1980s. Apple lost that first fight when the courts ruled that key elements of the Macintosh user interface were not eligible for copyright protection. Unfortunately, over the last 20 years, the courts have made it much easier to acquire software patents. This time around, Apple has more powerful legal weapons at its disposal, and so do its competitors. With both sides so heavily armed, there's a real danger that the smartphone wars will end up stifling competition.

Multitouch in the lab

High-tech innovations are often developed by laboratory researchers long before they're introduced into the commercial market. Multitouch computing was no exception. According to Bill Buxton, a multitouch pioneer now at Microsoft Research, the first multitouch screen was developed at Bell Labs in 1984. Buxton reports that the screen, created by Bob Boie, "used a transparent capacitive array of touch sensors overlaid on a CRT." It allowed the user to "manipulate graphical objects with fingers with excellent response time."

In the two decades that followed, researchers experimented with a variety of techniques for building multitouch displays. A 1991 Xerox PARC project called the "Digital Desk" used a projector and camera situated above an ordinary desk to track touches. A multitouch table called the DiamondTouch also used an overhead projector, but its touch sensor ran a small amount of current through the user's body into a receiver in the user's chair. NYU researcher Jeff Han developed a rear-projection display that achieved multitouch capabilities through a technique called "frustrated total internal reflection."

While they refined multitouch hardware, these researchers were also improving the software that ran on it. One of the most important areas of research was developing a vocabulary of gestures that took full advantage of the hardware's capabilities. The "Digital Desk" project included a sketching application that allowed images to be re-sized with a "pinch" gesture. A 2003 article by researchers at the University of Toronto described a tabletop touchscreen system that included a "flick" gesture to send objects from one user to another across the table.

In February 2006, Han brought a number of these ideas together in a suite of multitouch applications that he presented in a now-famous TED talk. He showed off a photo-viewing application that used the "pinch" gesture to re-size and rotate photographs and included an on-screen keyboard for labeling them. He also demonstrated an interactive map that let the user pan, rotate, and zoom with dragging and pinching gestures similar to those used on modern smartphones.

Commercializing multitouch

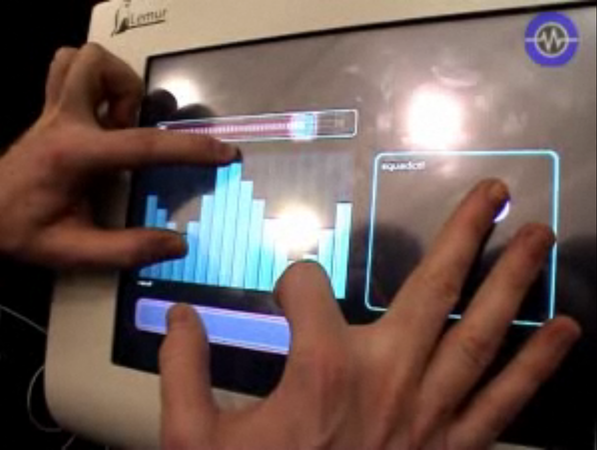

In 2004, a French firm called Jazzmutant unveiled the Lemur, a music controller that many consider the world's first commercial multitouch product. The Lemur could be configured to display a wide variety of buttons, sliders, and other user interface elements. When these were manipulated, the device would produce output in the MIDI-like Open Sound Control format. It went on sale in 2005 and cost more than $2,000.

The market for the Lemur was eventually undercut by the proliferation of low-cost tablet computers like the iPad. But Jazzmutant now licenses its multitouch technology under the name Stantum, which raised $13 million in funding in 2009.

Jeff Han also moved to commercialize his research, founding Perceptive Pixel in 2006. The firm focuses on building large, high-end multitouch displays and counts CNN among its clients. The DiamondTouch also became a commercial product in 2006.

Microsoft says its researchers have worked on multitouch technologies since 2001. In 2004, Microsoft's Andy Wilson announced Touchlight, a multitouch technology using cameras and a rear projector. Touchlight had an interface reminiscent of Minority Report: a three-dimensional object would be displayed on the screen, and the user could rotate and scale it with intuitive hand gestures.

Wilson was also a key figure in developing Microsoft Surface, a tabletop touchscreen system that used a similar combination of a rear-projected display and cameras. According to Microsoft, the hardware design was finalized in 2005. Surface was then introduced as a commercial product in mid-2007, a few months after the iPhone was unveiled. It too used dragging and pinching gestures to manipulate photographs and other objects on the screen.

Another key figure in the early development and commercialization of multitouch technologies was Wayne Westerman, a computer science researcher whose PhD dissertation described a sophisticated multitouch input device. Unlike the other technologies mentioned so far, Westerman's devices weren't multitouch displays; they were strictly input devices. Along with John Elias, Westerman went on to found FingerWorks, which produced a line of multitouch keyboards that were marketed as a way to relieve repetitive stress injuries.

FingerWorks was acquired by Apple in 2005, and Westerman and Elias became Apple employees. Their influence can be seen not only in the multitouch capabilities of the iPhone and the iPad, but also in the increasingly sophisticated multitouch capabilities of Mac trackpads.

Touchscreen phones

IBM's Simon, introduced in 1993, is widely regarded as the first touchscreen phone. It had a black-and-white screen and lacked multitouch capabilities, but it had many of the features we associate with smartphones today. Users dialed with an on-screen keypad, and Simon included a calendar, address book, alarm clock, and e-mail functionality. The e-mail app even included the ability to click on a phone number to dial it.

The Simon was not a big hit, but touchscreen phones continued improving. In the early 2000s, they gained color screens, more sophisticated apps, and built-in cameras. They continued to be single-touch devices, and many required a stylus for precise user input. Hardware keypads were standard. These phones ran operating systems from Microsoft, Palm, Research in Motion, and others.

April 2005 saw the release of the Neonode N1m. While lacking the sophistication of the iPhone, it had a few notable features. It was one of the few phones of its generation not to have a hardware keypad, relying almost entirely on software buttons for input. It supported swiping gestures in addition to individual taps. And it employed a "slide to unlock" gesture, almost identical to the one the iPhone made famous.

More sophisticated touchscreen interfaces began to emerge in 2006. In October, Synaptics unveiled the Onyx, a proof-of-concept color touchscreen phone that included a number of advanced features. While it may not have been a true multitouch device, its capacitive touch sensor included the ability to tell the difference between the user's finger and his cheek (allowing someone to answer the phone without worrying about accidental inputs) and to track a finger as it moved across the screen.

The Onyx's phone application had an intuitive conference calling feature, and the device included a music player, an interactive map, and a calendar.

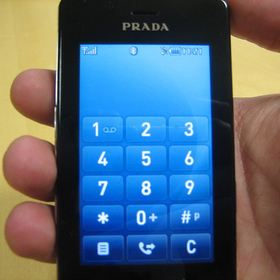

That December, LG announced the LG Prada — beating the iPhone to market by several months. The two devices shared several common features. The Prada dispensed with a traditional keypad, relying on software buttons for most input. It included the ability to play music, browse the Web, view photos, and check e-mail.

The iPhone was finally unveiled in January 2007. LG accused Apple of copying the Prada's design, saying the design had been disclosed in September 2006 when it was entered for an iF Design Award (which it won). The accusation isn't very credible, however. Although the phones have undeniable similarities, the iPhone has a more sophisticated user interface. For example, the iPhone used the flick-to-scroll gesture now common on smartphones, while the LG Prada used a desktop-style scroll bar. The two phones were likely developed independently.

So is Android a stolen product?
