After reading Ars Technica’s excellent article on internet infrastructure and what goes on behind the scenes to bring the internet to your home, I have a newfound respect for all the ISPs I have used in the past and the ISP business I am running now. Most of the stuff mentioned in the Ars article is not new to me, but it helps to be reminded, in a nice 7000-word article with informative pictures, what it takes to bring the internet to your home and how little control you have, as a service provider, over maintaining a high quality of service.

Ars promised a follow-up article on last-mile infrastructure, from NOC to your home, but let me tell you from personal experience: the last mile is the hardest and perhaps the most expensive part to maintain. An insane amount of planning and attention to detail goes on in the background to bring the internet to your home so that you can watch cat gifs.

A little back story.

We started our ISP as sort of a joke, or more like an I-have-a-couple-of-months-to-kill-with-nothing-better-to-do kind of thing. I had zero prior experience in running an ISP. I had some experience in running other businesses, but that experience isn’t easily transferable to running an ISP. My business partner had some experience working in the ISP industry in a very limited capacity, and he is not good with money or with business in general. The perfect couple and the perfect storm, waiting to happen. We applied for a nationwide ISP license; there aren’t a lot of them (I wonder why) and they are not very expensive to apply for, so why not? Surprisingly, we got our license without much hassle.

It costs a lot of money to start

Let me highlight some of the requirements and costs associated with running a proper ISP, without going into very specific details like our location and the specific network devices we use. Please keep in mind that we are only a two-year-old, medium-sized ISP in the developing world. Most of what we do will apply to most of the world, but some of it might not.

NOC and POP

Our NOC (Network Operations Center, where all the magic happens) needed to be located in an area that is easily accessible 24/7 without any restrictions. These kinds of locations are tricky and expensive to find when your business revolves around serving home users (mostly) in residential areas. We found a nice place in the business district with a 10-year lease with provisions to renegotiate after 5 years.

We had to connect to multiple upstream providers, each over two redundant physical connections (primary and backup), and each of these connections has to take a different route. So we have four upstream bandwidth providers, and for each physical connection we have to painstakingly plan fiber routes so that they don’t overlap. If there is any kind of problem with one of the cores connecting us to an upstream, say some absent-minded road construction workers accidentally cut our underground fiber, it won’t affect any of our other cores. We probably don’t need four upstreams, but from experience we have learned that to absolutely minimize downtime (which is rare) and bandwidth congestion, having multiple upstreams is the best way to go (with minimal overlapping peering among themselves). We also needed to research and negotiate with our upstream BW providers so that we get access to most of their network peers. It costs us more money, but it gives our users better speed and lower latency to things like gaming servers.
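To give a rough idea of what "routes that don’t overlap" means in practice, here is a minimal Python sketch. It is not our actual planning tool; the route names and segment IDs are made up for illustration, and it assumes each route can be described as a list of duct or street segments. It simply flags any pair of routes that share a segment, since one cut on a shared segment would take both down.

```python
from itertools import combinations

def shared_segments(routes):
    """Return {(route_a, route_b): shared_segment_set} for every overlapping pair."""
    overlaps = {}
    for (name_a, segs_a), (name_b, segs_b) in combinations(routes.items(), 2):
        common = set(segs_a) & set(segs_b)
        if common:
            overlaps[(name_a, name_b)] = common
    return overlaps

# Hypothetical routes: names and segment IDs are placeholders, not real data.
routes = {
    "upstream1-primary": ["seg-A", "seg-B", "seg-C"],
    "upstream1-backup": ["seg-D", "seg-E"],
    "upstream2-primary": ["seg-F", "seg-B"],
}

overlaps = shared_segments(routes)
for pair, segs in overlaps.items():
    print(pair, "share", sorted(segs))
```

In this made-up example, the two primary routes both pass through seg-B, so one of them would need to be re-planned.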

We also needed to have on-premise Google and Akamai CDN nodes. Google has a program where you can apply for their GGC (Google Global Cache) hardware for your premises. It is free to apply, and you get 3 nice Dell GGC nodes with a combined throughput of 19 Gbps to begin with. But there is a minimum requirement before you can apply: your ASN needs to have at least 2 Gbps worth of bandwidth requests to Google services before you are eligible for their hardware. When you are starting out, you have no option but to buy expensive BW to cover YouTube traffic. YouTube is about 2/5 of our total traffic, and it can take more than 6 months to reach the minimum requirement, plus another 3 months for the nodes to be delivered to our premises; configuring them and starting to benefit from them can take another month or so. All this time we are burning real money. We have to sell BW to customers at a price competitive with the existing market, a price that already includes the benefit of having an on-premise GGC node, which we obviously didn’t have. Once we got our GGC nodes running at maximum efficiency, in a matter of weeks we were almost as competitive as our competition. At the moment, we are in talks to apply for Akamai nodes, but Akamai has a higher barrier to entry, and though it won’t save us a lot of money, it will give better service to our customers, which is equally important to us. We are also considering applying for Netflix nodes, but since they just started in our country, I don’t know how accommodating they will be.
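To put the waiting period in perspective, here is a back-of-the-envelope Python sketch. The timeline figures and the 2/5 YouTube share come from the paragraph above; the total traffic and the price per Gbps are placeholders I made up, not our real numbers, and the sketch assumes traffic stays flat for the whole period, which it doesn’t in real life.

```python
from fractions import Fraction

YOUTUBE_SHARE = Fraction(2, 5)   # about 2/5 of total traffic
MONTHS_TO_QUALIFY = 6            # "more than 6 months", so treat this as a lower bound
MONTHS_TO_DELIVER = 3
MONTHS_TO_CONFIGURE = 1

def uncached_youtube_cost(total_gbps, price_per_gbps_month):
    """Estimate what YouTube traffic costs as paid transit before a cache is live."""
    months = MONTHS_TO_QUALIFY + MONTHS_TO_DELIVER + MONTHS_TO_CONFIGURE
    youtube_gbps = total_gbps * YOUTUBE_SHARE
    return months, youtube_gbps, months * youtube_gbps * price_per_gbps_month

# Placeholder inputs: 5 Gbps total traffic, 1000 currency units per Gbps per month.
months, youtube_gbps, cost = uncached_youtube_cost(total_gbps=5, price_per_gbps_month=1000)
print(f"{months} months, {float(youtube_gbps)} Gbps of YouTube, cost {float(cost)}")
```

Whatever the real price is, the shape of the problem is the same: roughly ten months of paying full transit rates for two fifths of your traffic.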

We lease underground cores from third parties. Cores are expensive, and we often have to haggle back and forth to reduce the cost of the cores that serve as backups, which we will probably not use more than once a year, for a couple of hours.

We needed multiple levels of redundancy with power too. We have two power companies in our region, but only one of them is directly connected to our office. The other one has a presence 3-4 blocks away from us. Though connecting with the second power company involved some legal issues, we jumped through some hoops to get connected to them. If you thought last-mile OH (overhead) fiber was expensive to install and maintain, try doing it for power lines. So in the event of a power failure in our zone, we should still have power available from our backup connection. Maintaining this backup connection is expensive even if you use it once a year; as a business you have to pay a minimum amount to stay connected, whether you use it or not. We also have multiple online UPSes (with 50% overhead capacity), which can provide about 20 hours’ worth of power in the event of a total power failure from the mains. We also have two diesel generators, located on opposite sides of the building, with enough diesel in stock for 2 days of uninterrupted power.
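As a quick sanity check on those numbers, here is a tiny Python sketch of how long we could run if both power companies went dark at the same time. It assumes the UPS and the generators are used one after the other, that the generators can carry the full load, and that the 2 days of diesel is the combined stock; those are simplifications for illustration, not a description of our actual failover procedure.

```python
UPS_HOURS = 20      # about 20 hours from the UPS banks
DIESEL_DAYS = 2     # diesel in stock, assumed to be the combined figure

def autonomy_hours(ups_hours, diesel_days):
    """Total hours without any grid power, using UPS and generators back to back."""
    return ups_hours + diesel_days * 24

total = autonomy_hours(UPS_HOURS, DIESEL_DAYS)
print(f"{total} hours (~{total / 24:.1f} days)")
```

That works out to a little under three days before someone has to go find more diesel.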

The server room is isolated from the rest of the office in our NOC (naturally). The room needs to be carefully climate-controlled (multiple air-conditioning units running 24 hours a day), access-controlled through biometric readers, and monitored with HD cameras. I am not going to go into specific brand names or product types we use for our edge routers and distribution switches, but they are the usual suspects that most people use out there. It’s not like you have a lot of high-quality options anyway: mostly Cisco, Juniper, Mikrotik (home user BW control) and some Linux boxes. These switches and routers are ridiculously expensive, and you really need to have a drop-in backup for your edge and distribution routers/switches. Not to mention you need a staging lab to test new configurations before deploying them to the live network. Since our network is on 10G, LR (long-range) SFP+ modules that work well with Cisco and Juniper devices can be very expensive, especially in this part of the world where they are not readily available, so we have to keep a lot of spares on hand all the time.

Ideally, you should have a drop-in NOC available close by so that you can switch to your backup NOC in the case of a massive disaster at your primary NOC. It’s in the back of our minds constantly. The amount of planning and expense involved in setting it up is ridiculous. Hopefully, we will do it soon, possibly by the end of this year.

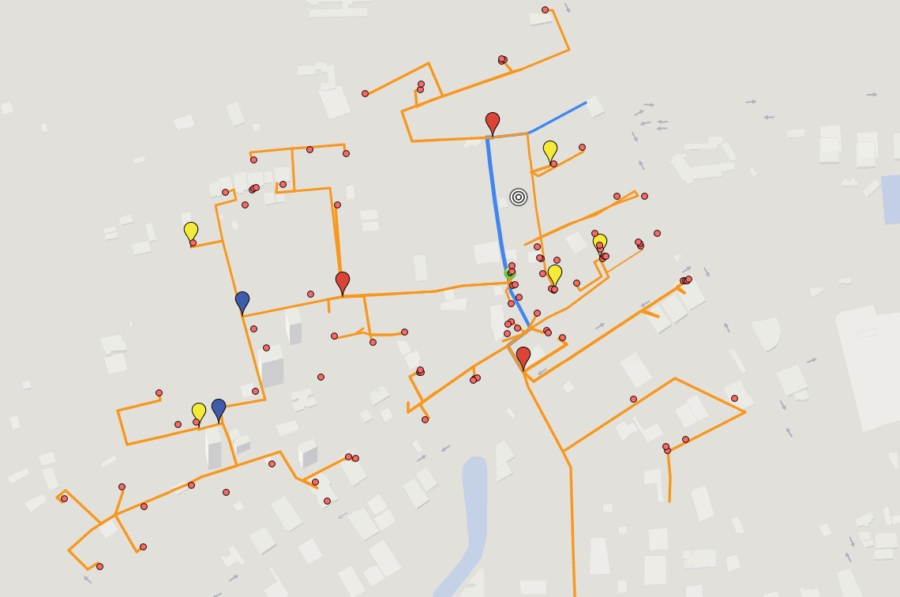

We also have 30+ POPs all over the country with cross-connected ring networks to ensure multiple redundancies. Each of these POPs has similar power redundancy with 24/7 support personnel. Our support needed to be very solid. All ISPs sell the same thing that meets 90% of users’ needs; our only differentiating factor is the quality of customer support. So we have a small army of well-paid and well-trained support staff waiting on standby 24/7. It goes without saying that all of this costs a decent amount of money.

Support

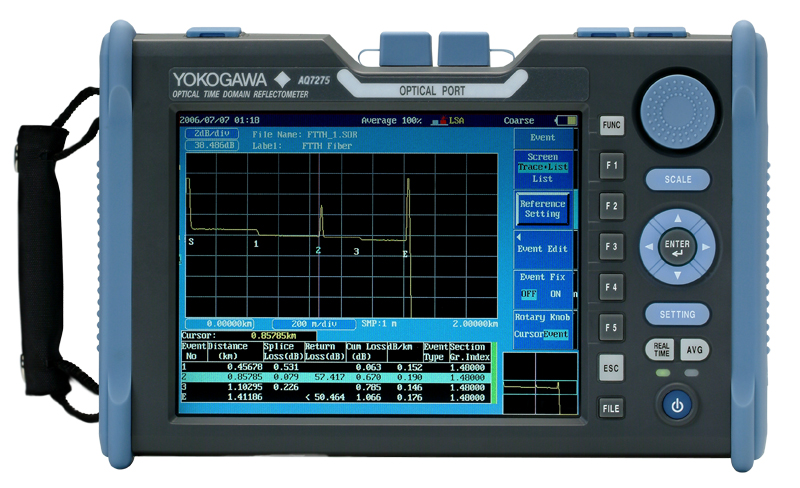

We have three types of support: on the phone, on customer premises (within 12 hours of a call, depending on the client’s availability), and last-mile fiber support. We have 100km+ of overhead fiber cable, and almost double that distance of underground fiber leased from third-party providers. This large network of fiber needs constant maintenance, almost on a daily basis. Our fiber support teams are equipped with multiple expensive fiber splicing machines and OTDRs to help them fix problems as soon as possible. This team is also in charge of last-mile connectivity from our POPs to home users. Since we are an FTTH (Fiber to the Home) service provider, we need to install a fairly expensive media converter at each customer premises to provide service.

Sales

On the sales and marketing side, our team has to deal with ruthless competition and a saturated market. We are fairly new to the ISP business (only 2 years old), while most of the existing ISPs have been around for decades. Not only do they have a solid network all around the country, they also have a brand name that most people can relate to. It’s extremely difficult to sway a customer away from their existing internet connection. Sometimes we have to offer at least a month’s worth of internet for free so that customers get time to evaluate and compare the services. When you are starting out, your advertising cost can be insane. One good thing about being in the developing world is that even though the market is saturated as it stands now, it hasn’t topped out, because there is still room to grow. In our case, that’s mostly outside the big cities and in rural areas where big ISPs didn’t invest much.

What can go wrong?

You can do everything by the book and spare no expense to shave off that last bit of latency, and things can still go horribly wrong. For instance, since we are a developing country, our government and its regulatory bodies are always tweaking regulations to best benefit the users. But most of these decisions don’t take into account how they affect ISP businesses. Long-running businesses can absorb some of the cost of regulation changes, but ISPs that are just starting out, or people hoping to start one, can easily get discouraged. So in the long term, you have less competition in an already difficult sector.

We also have to consider the challenges our upstreams face. Sometimes their backbones can go down and cause massive network congestion, and it can take weeks to fix; it’s not even in their hands, as they have to rely on their backbone provider. When a customer calls about slow internet speed, we cannot tell them about upstream backbone issues or give an estimated time for the fix, because they don’t care and they want an immediate fix. In this kind of situation, we assure them that the problem will be fixed soon. If we think it will take a long time to get normal service from one of our upstreams, we either shift the client to another, stable upstream (that’s why we have four of them) or take some extra short-term BW and move 50% of our clients till things normalize. I know we should be compensated for this loss by our upstream since we have an SLA with them, but things are not as simple as they sound. A lot of these SLAs can’t be easily enforced with 100% compensation.

As mentioned before, underground cores could go down. Overhead cores could get cut or damaged because of the weather. We could get hit by a very large and complex DDoS attack that our existing solution might not be able to handle. Sometimes our upstream just turns off our interface till they or we figure out how to deal with the DDoS. Hardware for DDoS mitigation is very expensive.

Don’t start an ISP business

So to conclude, I would highly advise against starting your own ISP, even if you have a lot of money to throw around. The industry average is 4-5 years before you start to see a return on your investment and start making profits. Surprisingly, we are at two years and already at a profit; not by much, but it’s a start. But rest assured we are an edge case, perhaps because we are industry outsiders and did some things that most ISPs normally wouldn’t have done. But that’s a topic for another day and I hope to write about it soon.