Peer review is the process that decides whether your work gets published in an academic journal. It doesn't work very well any more, mainly as a result of the enormous number of papers that are being published (an estimated 1.3 million papers in 23,750 journals in 2006). There simply aren't enough competent people to do the job. The overwhelming effect of the huge (and unpaid) effort that is put into reviewing papers is to maintain a status hierarchy of journals. Any paper, however bad, can now get published in a journal that claims to be peer-reviewed.

The blame for this sad situation lies with the people who have imposed a publish-or-perish culture, namely research funders and senior people in universities. To have "written" 800 papers is regarded as something to boast about rather than being rather shameful. University PR departments encourage exaggerated claims, and hard-pressed authors go along with them.

Not long ago, Imperial College's medicine department were told that their "productivity" target for publications was to "publish three papers per annum including one in a prestigious journal with an impact factor of at least five." The effect of instructions like that is to reduce the quality of science and to demoralise the victims of this sort of mismanagement.

The only people who benefit from the intense pressure to publish are those in the publishing industry. Hardly a day passes without a new journal starting. My email inbox is full of invitations to publish in a weird variety of journals. They'll take just about anything. The US National Library of Medicine indexes 39 journals that deal with alternative medicine. They are all "peer-reviewed", but rarely publish anything worth reading. The peer review for a journal on homeopathy is, presumably, done largely by other believers in magic. If that were not the case, these journals would soon vanish.

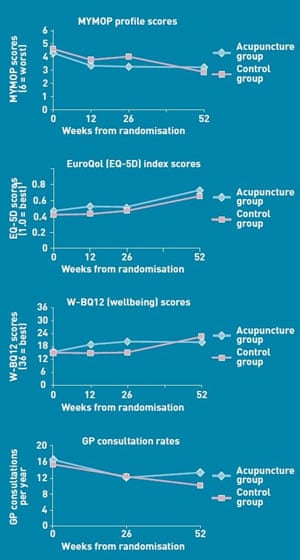

But it isn't only quack journals that have failures in peer review. In June, the British Journal of General Practice published a paper, "Acupuncture for 'frequent attenders' with medically unexplained symptoms: a randomised controlled trial (CACTUS study)". It has lots of numbers, but the result is very easy to see. All you have to do is look at their Figure.

It's obvious at a glance that acupuncture has at best a tiny and erratic effect on any of the outcomes that were measured. The results are indeed quite interesting because they show that acupuncture doesn't even have a perceptible placebo effect. But this is not what the authors said. Their conclusion was: "The addition of 12 sessions of five-element acupuncture to usual care resulted in improved health status and wellbeing that was sustained for 12 months."

How on earth did the group, led by Charlotte Paterson at the Peninsula College of Medicine and Dentistry at Exeter University, manage to reach a conclusion like that? Well, perhaps they are people committed to acupuncture, and it is common enough for advocates of alternative medicine to ignore evidence, even their own. But the real question is how conclusions like these came to be published in a respectable medical journal that is widely read by GPs. To make matters worse, the journal issued a press release that quotes its editor, Professor Roger Jones DM, FRCP, FRCGP, FMedSci.

"Although there are countless reports of the benefits of acupuncture for a range of medical problems, there have been very few well-conducted, randomised controlled trials. Charlotte Paterson's work considerably strengthens the evidence base for using acupuncture to help patients who are troubled by symptoms that we find difficult both to diagnose and to treat."

The tabloid press had a field day on the basis of the press release. The Daily Mail, for example, reported: "Millions of patients with 'unexplained symptoms' could benefit from acupuncture on the NHS, it is claimed". But there were howls of outrage in the blogosphere, and some choice comments on Twitter. In these days of the citizen journalist, mistakes are soon spotted.

Two months later, the journal published 10 letters that pointed out the problems with the paper. Those problems are so very obvious you'd imagine that the journal would apologise for a failure of the peer review process, and for a press release that misled the public. Anyone can make a mistake, but there was no public apology and no corrected press release.

(Charlotte Paterson and Roger Jones respond to the author's criticisms below.)

So what can be done about scientific publishing? The only service the publishers provide is to arrange for reviews and to print the journals. And for this they charge an exorbitant fee, a racket George Monbiot rightly calls "pure rentier capitalism".

There is an alternative: publish your paper yourself on the web and open the comments. This sort of post-publication review would reduce costs enormously, and the results would be open for anyone to read without paying. It would also destroy the hegemony of half a dozen high-status journals. Everyone wants to publish in Nature, because it's seen as a passport to promotion and funding. The Nature Publishing Group has cashed in by starting dozens of other journals with Nature in the title.

There is just one problem with self-publication and post-publication review. In 2006 Nature magazine tried it and it wasn't popular. Most people who were asked didn't want to take part, and, more important, most people who were invited to comment declined to do so. The probable reason is the exceedingly competitive nature of research in many fields. A junior person might be terrified to criticise a senior person, and senior researchers might similarly be terrified of criticising each other, in case the person criticised was reviewing their next grant. Nevertheless, I suspect this sort of system has to come and there are things that could be done to ameliorate the problems.

First, it would be essential to allow anonymous comments. Most reviewers are anonymous at present, so why not online? Second, the vast flood of papers that make the present system impossible should be stemmed. I'd suggest scientists should limit themselves to an average of two original papers a year. They should also be limited to holding one research grant at a time. Anyone who thought their work necessitated more than this would have to be scrutinised very carefully. It's well known that small research groups give better value than big ones, so that should be the rule.

With far fewer papers being published, reviewers, grant committees and promotion committees might be able to read the papers, not just count them. A report of a parliamentary select committee on peer review concluded:

"We therefore have concerns about the use of journal Impact Factor as a proxy measure for the quality of individual articles. While we have been assured by research funders that they do not use this as a proxy measure for the quality of research or of individual articles, representatives of research institutions have suggested that publication in a high-impact journal is still an important consideration when assessing individuals for career progression."

These politicians show more sense than academics and research funders. My own university's promotion form still says "Candidates may wish to provide impact factors, citation rates or other bibliometric information, where appropriate." Most candidates would interpret that as an instruction to do so.

These proposals all depend on research being honest, but cases of outright fraud do happen. In Andrew Wakefield's case, the fraud linked autism with the MMR vaccine, causing the deaths of children from measles, and we owe a lot to Brian Deer, the journalist who exposed it.

Deer has recently backed a proposal from the House of Commons Science and Technology select committee that an official regulator should be appointed to police science. I don't think this could work. Is the regulator going to repeat experiments, or even check original data, to make sure all is well? In all probability, a regulator would soon degenerate into yet another box-ticking quango, and end up, like the Quality Assurance Agency, doing more harm than good. The way to improve honesty is to remove official incentives to dishonesty.

By and large, the problem does not arise from outright fraud, which is rare. It arises from official pressure to publish when you have nothing to say.

David Colquhoun is professor of pharmacology at University College London. He blogs at DC's Improbable Science.

Response on behalf of the CACTUS Study research team

Dr Charlotte Paterson, Peninsula College of Medicine & Dentistry, University of Exeter.

Professor Colquhoun uses the freely available published paper of our CACTUS trial (Classical Acupuncture for Treating Unexplained Symptoms) as an example of "failures in peer review". His contention, that "it has lots of numbers, but the result is very easy to see. All you have to do is look at their figure" does not correspond with how randomised trials such as this are analysed. In table 3 of the paper we present all the data from the study with the results of the standard statistical tests. It is the results of these tests that determine whether the difference between the groups (those that did receive acupuncture in the first 26 weeks and those that didn't) is statistically significant, ie whether it is most unlikely to have occurred by chance alone.

In our case a statistically significant difference in favour of acupuncture was found for the primary outcome measure – a questionnaire called MYMOP that measures a change in individualised health status – and for the wellbeing questionnaire. Hence our conclusion that the addition of 12 sessions of five-element acupuncture to usual care resulted in an improvement in health status and wellbeing.

The graphs in figure 2 (reproduced in Colquhoun's article) are provided for readers who prefer to see findings depicted in this way. They are, however, only useful when accompanied by the title of the figure: "Outcome data over 52 weeks (acupuncture group received acupuncture weeks 0–26, control group received acupuncture weeks 26–52)". With this information (omitted by Colquhoun), your readers can come to their own conclusions about what appeared to happen to each group during the second six months. This is of interest, but is not the basis for our statistical conclusions, and never would be for any trial.

We discuss the strengths and weaknesses of our study in the paper, including the fact that the average benefit was relatively small, but would refute Colquhoun's unsubstantiated suggestion that we "are people committed to acupuncture". This is simply untrue. As for our response to peer review, this is a process that enabled us to improve the paper, and for our response to the debate in the journal letter pages, we refer you to the August issue of the British Journal of General Practice where it is published.

Unfortunately, the voices of patients and the public have been largely absent from these debates, although the same issue of the journal includes our paper reporting the results of a study in which some of the trial participants were interviewed. This aspect of the study provided additional in-depth information about the patient experience and the findings support the trial results and provide potential explanations and new insights. For example, in addition to perceiving a range of positive effects, some participants appeared to take on a more active role in consultations and self-care.

We found peer review to be helpful and we believe that the statistical findings of the randomised trial, together with the qualitative analysis of the patients' perspectives, provide doctors and patients with robust and useful information for making decisions about treatment.

Roger Jones, editor of the British Journal of General Practice

David Colquhoun's critique of my journal's peer review and editorial processes is based on a single table lifted from the main research paper, in which the detailed numerical data tell a somewhat different story, rendering his analysis partial and his conclusions specious.

Paterson and colleagues' paper was reviewed on two separate occasions by two expert statisticians, and read by me. The British Journal of General Practice operates an open peer review system, in which the identities of the authors and reviewers are known to each other. The paper was initially rejected, with re-submission offered if the authors could deal with numerous methodological and some presentational issues in their manuscript.

The lack of "attention controls" – which mimic time spent talking and listening to patients – was pointed out, although of course this was a pragmatic, rather than an explanatory, randomised controlled trial (RCT). The re-submitted paper was judged to be much improved, although one reviewer still had concerns about the effect size of the intervention (acupuncture). I decided to publish the paper because it reported a well-designed and well-conducted RCT in a difficult area of practice: the subjects were patients with unexplained symptoms for which traditional medicine seemed to have little to offer. It was accompanied by a qualitative evaluation of patients' experiences in the same trial, and we also carried an editorial on acupuncture by academic colleagues from Hong Kong.

Publication was rapidly followed by a series of unpleasant and personally vindictive emails and blog comments from Colquhoun, which I was able to discuss at a meeting of the journal's editorial board a couple of weeks later. The board endorsed the working of the peer review process and saw no reason to retract the paper. They were concerned that the results were presented in an overly positive way in the journal, for which I take full responsibility. The next available print issue of the journal contained all the letters we had received about the paper, a note from me about the editorial board meeting and a response from the authors. We later took the unusual step of providing free access to the paper and the associated correspondence for non-subscribers.

Whether or not this episode represents a failure of peer review – and I don't think it does – it has nothing to do with the argument against the principle of peer review. Colquhoun's response, however, represents a failure of post-publication review. It seems to me that his animus derives much more from a profound antipathy towards the subject matter of this research than from a concern about the scientific methods used to investigate it. I find his hectoring communicative style unpersuasive and inappropriate and, if this is a vision of post-publication review in the future, God help us.